Deep Learning for Gesture Recognition

Gestures are a common and especially appealing method of human-computer interaction. In this project, I experiment with several ways of detecting hand gestures within a video stream.

3D Convolutional Neural Network Experiments

Two 3D convolutional neural networks (3D CNNs) were implemented in PyTorch and trained on the nvGesture dataset: C3D and ResNet (2+1)D. Whereas a 2D CNN classifies single images, a 3D CNN classifies video. Both networks extract spatiotemporal features, which are useful for classifying gestures.
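To make the (2+1)D idea concrete: ResNet (2+1)D replaces each t×d×d 3D convolution with a 1×d×d spatial convolution followed by a t×1×1 temporal convolution. In the original R(2+1)D paper, the number of intermediate channels M between the two is chosen so that the factorized block has about as many weights as the full 3D convolution. The sketch below computes M and both parameter counts (biases ignored). The function names are illustrative only; they are not part of PyTorch or of this project's code.

```python
def full_3d_params(n_in: int, n_out: int, t: int, d: int) -> int:
    """Weights in a single t x d x d 3D convolution (bias ignored)."""
    return n_in * n_out * t * d * d


def r2plus1d_mid_channels(n_in: int, n_out: int, t: int, d: int) -> int:
    """Intermediate channel count M that matches the 3D parameter count.

    Derived from n_in*M*d*d + M*n_out*t = n_in*n_out*t*d*d, rounded down.
    """
    return (t * d * d * n_in * n_out) // (d * d * n_in + t * n_out)


def r2plus1d_params(n_in: int, n_out: int, t: int, d: int) -> int:
    """Weights in a 1 x d x d spatial conv followed by a t x 1 x 1 temporal conv."""
    m = r2plus1d_mid_channels(n_in, n_out, t, d)
    spatial = n_in * m * d * d   # n_in -> m channels, 1 x d x d kernel
    temporal = m * n_out * t     # m -> n_out channels, t x 1 x 1 kernel
    return spatial + temporal


if __name__ == "__main__":
    # Illustrative 3x3x3 block with 64 input and 64 output channels.
    print(r2plus1d_mid_channels(64, 64, 3, 3))
    print(full_3d_params(64, 64, 3, 3), r2plus1d_params(64, 64, 3, 3))
```

For a 3×3×3 block with 64 input and 64 output channels, M works out to 144 and both variants have 110,592 weights. The benefit of the factorization is the extra nonlinearity that can be placed between the spatial and temporal convolutions at no extra parameter cost.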

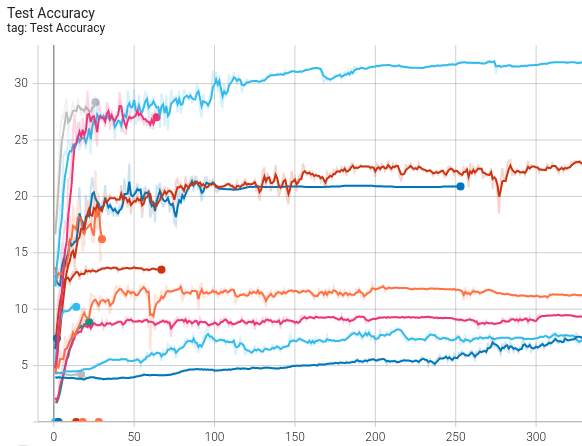

Here, these networks (principally C3D) were used to try to reproduce the results of a 2016 NVIDIA paper on classifying gestures in the nvGesture dataset, a dataset of 1050 videos covering 25 loosely segmented gesture classes. The spatiotemporal features extracted by the networks were then fed into an LSTM layer before classification. As the results below show, the networks reimplemented from the literature did not train as well as expected; even so, tackling this difficult problem was a great introduction to PyTorch.
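Feeding 3D CNN features into an LSTM implies treating each video as a sequence of short clips: the 3D CNN turns each clip into a feature vector, and the LSTM reads those vectors in order. The sketch below shows only that bookkeeping step, assuming non-overlapping clips of a fixed length and a toy function standing in for C3D. The helper names and the clip length used in the example are hypothetical, not taken from the project.

```python
from typing import Callable, List, Sequence, TypeVar

F = TypeVar("F")  # one frame (image array, tensor, ...)
R = TypeVar("R")  # whatever the per-clip feature extractor returns


def split_into_clips(frames: Sequence[F], clip_len: int) -> List[List[F]]:
    """Split a video into consecutive, non-overlapping clips of clip_len frames.

    Trailing frames that do not fill a whole clip are dropped.
    """
    if clip_len <= 0:
        raise ValueError("clip_len must be positive")
    n_clips = len(frames) // clip_len
    return [list(frames[i * clip_len:(i + 1) * clip_len]) for i in range(n_clips)]


def clip_feature_sequence(frames: Sequence[F], clip_len: int,
                          extract: Callable[[List[F]], R]) -> List[R]:
    """Apply a per-clip extractor (a stand-in for C3D) to every clip in order.

    The result is the time-ordered sequence an LSTM would consume.
    """
    return [extract(clip) for clip in split_into_clips(frames, clip_len)]
```

With 20 frames and a hypothetical clip length of 8, the last 4 frames are dropped and the LSTM receives a sequence of two feature vectors.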

TensorBoard results for the experiments on the nvGesture dataset

1D Parallelized Convolutional Neural Network

Additionally, a parallelized 1D CNN was implemented to classify dynamic hand gestures in the DHG-14 dataset, using the XYZ coordinates of the hand's skeletal points as detected by software such as MediaPipe. A real-time inference wrapper was also built around this network. While training and validation accuracies were strong, this approach suffers from the same segmentation problem as before: in a continuous stream, the network must also work out where each gesture begins and ends.
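A 1D CNN on skeletal data convolves along time, treating each coordinate of each joint as its own 1D signal, which in a parallelized design can be routed to separate branches. The sketch below shows one way a real-time wrapper could prepare that input: it keeps a sliding window of the most recent frames of (x, y, z) joint coordinates and transposes it into one time series per joint coordinate. The class name, channel ordering and window length are assumptions for illustration. DHG-14 provides 22 joints per hand, while MediaPipe reports 21 hand landmarks, so the joint count is left as a parameter.

```python
from collections import deque
from typing import Deque, List, Optional, Sequence, Tuple

Joint = Tuple[float, float, float]  # (x, y, z) of one skeletal point


class SkeletonWindow:
    """Hypothetical sliding-window buffer feeding a skeleton-based 1D CNN."""

    def __init__(self, window_len: int, n_joints: int) -> None:
        self.window_len = window_len
        self.n_joints = n_joints
        # deque(maxlen=...) drops the oldest frame once the window is full.
        self.frames: Deque[Sequence[Joint]] = deque(maxlen=window_len)

    def push(self, joints: Sequence[Joint]) -> None:
        """Add the joints detected in the newest video frame."""
        if len(joints) != self.n_joints:
            raise ValueError(f"expected {self.n_joints} joints, got {len(joints)}")
        self.frames.append(joints)

    def ready(self) -> bool:
        return len(self.frames) == self.window_len

    def as_channels(self) -> Optional[List[List[float]]]:
        """Return 3 * n_joints series of length window_len, or None if not full.

        Channel order (an assumption): joint0.x, joint0.y, joint0.z, joint1.x, ...
        """
        if not self.ready():
            return None
        channels: List[List[float]] = []
        for j in range(self.n_joints):
            for axis in range(3):
                channels.append([frame[j][axis] for frame in self.frames])
        return channels
```

After every new frame, `as_channels()` yields the next overlapping window, which would be converted to a (channels, time) tensor and passed to the network.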

2D Convolutional Neural Network

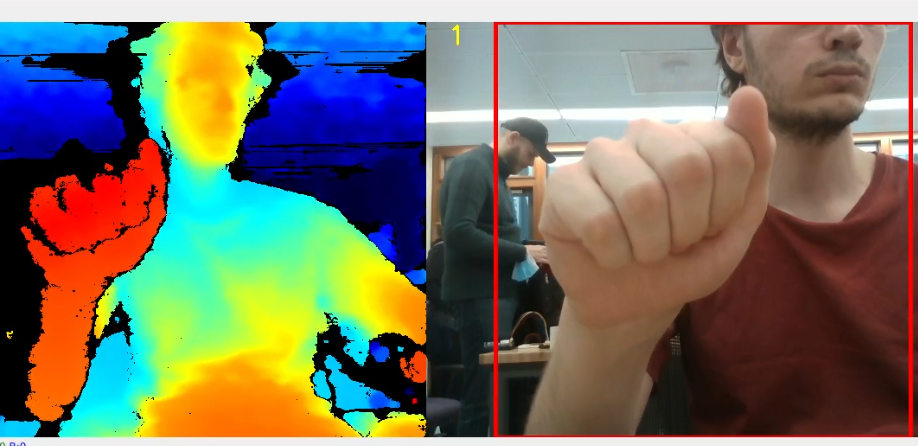

Finally, a dataset of static infrared images of hands, captured with a Leap Motion camera, was used to classify static gestures. Given the nature of these images, depth data with the background removed can also approximate them. A 2D CNN was implemented in PyTorch, with the code for parsing the dataset files adapted from Kaggle. The classifier operates on single frames of a video stream and is accurate and fast enough to run in real time at 30 FPS.
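At 30 FPS, each frame leaves about 1000 / 30 ≈ 33.3 ms for capture, preprocessing and classification combined. A simple way to check whether a single-frame classifier fits that budget is to time it over a batch of frames, as sketched below. Here `classify` is a hypothetical stand-in for the project's PyTorch model, and the helper names are illustrative.

```python
import time
from typing import Callable, Iterable, Tuple


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at the given frame rate."""
    return 1000.0 / fps


def fits_budget(classify: Callable[[object], object], frames: Iterable[object],
                fps: float = 30.0) -> Tuple[float, bool]:
    """Return the mean per-frame latency of `classify` (ms) and whether it fits."""
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        classify(frame)  # hypothetical model call; its output is ignored here
        latencies.append((time.perf_counter() - start) * 1000.0)
    if not latencies:
        raise ValueError("no frames to time")
    mean_ms = sum(latencies) / len(latencies)
    return mean_ms, mean_ms <= frame_budget_ms(fps)
```

The mean hides occasional slow frames; checking a high percentile of `latencies` instead would give a stricter real-time test.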

An infrared image from the dataset

Controlling the Drone

This project uses a DJI Tello EDU drone because it offers an easy-to-use software development kit (SDK). The commands that can be sent to the drone are listed in the SDK documentation, and each gesture is mapped to one of these commands.
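The Tello SDK is text based: the controller sends plain ASCII commands over UDP to the drone (by default 192.168.10.1, port 8889), starting with `command` to enter SDK mode and then sending commands such as `takeoff`, `land`, `up 50` or `cw 90` (distances in cm, angles in degrees). The sketch below maps gesture labels to such commands using only the standard `socket` module. The gesture names and step sizes are hypothetical, and the exact command set should be checked against the SDK documentation for the drone's firmware.

```python
import socket
from typing import Dict, Optional, Tuple

TELLO_ADDR: Tuple[str, int] = ("192.168.10.1", 8889)  # default Tello SDK command address

# Hypothetical gesture labels mapped to Tello SDK text commands.
GESTURE_TO_COMMAND: Dict[str, str] = {
    "palm": "takeoff",
    "fist": "land",
    "thumb_up": "up 50",      # climb 50 cm
    "thumb_down": "down 50",  # descend 50 cm
    "point": "forward 50",    # move forward 50 cm
    "l_shape": "cw 90",       # rotate 90 degrees clockwise
}


def command_for(gesture: str) -> Optional[str]:
    """Return the SDK command for a gesture, or None if it is unmapped."""
    return GESTURE_TO_COMMAND.get(gesture)


def send_command(sock: socket.socket, command: str,
                 addr: Tuple[str, int] = TELLO_ADDR) -> None:
    """Send one ASCII command; the drone replies 'ok' or 'error' to the sender."""
    sock.sendto(command.encode("ascii"), addr)
```

In use, a single UDP socket is opened, `send_command(sock, "command")` is sent once to enter SDK mode, and each recognized gesture is then forwarded with `send_command(sock, cmd)` whenever `command_for(gesture)` returns a command.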

As of March 17th, the final demonstration uses the depth/infrared classifier to distinguish between 10 static hand gestures in a depth or infrared video stream. Although training and validation accuracy on the dataset exceeds 99%, real-time recognition is significantly weaker: only four or five of the classes can be reliably told apart.

This gap stems from the input data: the hands in the dataset images are cleanly segmented, whereas the hands in the live video are only loosely segmented from the background. Removing pixels with depth values greater than a threshold of 0.5 m improves this, though there is still room for improvement.
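The depth threshold amounts to a per-pixel mask. A minimal sketch in plain Python, assuming the depth frame is a 2D grid of distances in metres and that background pixels are filled with 0 (the function name and fill value are assumptions):

```python
from typing import List, Sequence

DEPTH_THRESHOLD_M = 0.5  # pixels farther than this are treated as background


def remove_background(depth: Sequence[Sequence[float]],
                      threshold_m: float = DEPTH_THRESHOLD_M,
                      fill: float = 0.0) -> List[List[float]]:
    """Replace every pixel deeper than threshold_m (metres) with `fill`."""
    return [[d if d <= threshold_m else fill for d in row] for row in depth]
```

With NumPy, `np.where(depth > 0.5, 0.0, depth)` performs the same masking on a whole array at once. If the camera reports depth in millimetres rather than metres, the threshold becomes 500.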

The drone is controlled by sending it the command that corresponds to the detected gesture, provided the classifier's probability for that gesture exceeds a threshold of 0.7. Once a gesture is accepted, the next N frames are ignored so that a single gesture does not trigger repeated commands.
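The acceptance rule described above (probability above 0.7, then ignore the next N frames) can be sketched as a small state machine. The class and label names are hypothetical, and N is left as a required parameter because its value is not specified here.

```python
from typing import Optional, Sequence


class GestureGate:
    """Hypothetical filter turning per-frame class probabilities into gesture events.

    A gesture is accepted only if its probability exceeds `threshold`; after an
    accepted gesture, the next `cooldown_frames` frames (N) are ignored.
    """

    def __init__(self, labels: Sequence[str], cooldown_frames: int,
                 threshold: float = 0.7) -> None:
        self.labels = list(labels)
        self.cooldown_frames = cooldown_frames
        self.threshold = threshold
        self._skip = 0  # frames still to ignore

    def update(self, probs: Sequence[float]) -> Optional[str]:
        """Process one frame's probabilities; return an accepted label or None."""
        if self._skip > 0:
            self._skip -= 1
            return None
        best = max(range(len(probs)), key=lambda i: probs[i])
        if probs[best] > self.threshold:
            self._skip = self.cooldown_frames
            return self.labels[best]
        return None
```

Each label returned by `update` would then be translated into the corresponding drone command; frames below the threshold or inside the cooldown produce no command at all.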

More information and the source code for this project can be found in its GitHub repository.